TDLR: In the many conversations we’re having about ChatGPT–in the press and with each other–we tend to focus on strengths and limitations of the generative AI technology itself, largely because there’s so much to say about both. But there’s another discussion that we should be having just as vociferously and in parallel–that of the new mindset that we, the human users, need to adopt when dealing with AI bots like ChatGPT. This is a mindset that should be informed by the system’s strengths and weaknesses, but one that is fundamentally about our own understanding of interacting with external agents. Though interacting with ChatGPT simultaneously feels like interacting with a person and with a computer, these interactions are fundamentally different from the ways we are used to dealing with either. What should become our guiding principles here? My recent interactions with the system, in which ChatGPT fabricated all its sources then admitted as such, suggest that we need to be on guard when it comes to how generative AI differentiates its own generations from objective information.

Searching for Knowledge with ChatGPT

Ironically this story starts with me considering knowledge itself. Recently I have been conducting research in education, looking into the role of American institutions of higher education in identifying what they want their graduates to know. As part of this research, I decided to look at how university mission statements talk about knowledge specifically. After doing a number of Google searches looking at major university mission statements, I thought that an Artificial Intelligence (AI) bot like ChatGPT might be a helpful tool for research assistance. Could AI quickly gather mission statements from universities and synthesize common features in the way a human research assistant might? I decided to find out. Spoiler alert: things went alarmingly wrong, though not at first.

I’ll describe my ChatGPT conversation below. You can read my entire chat transcript with ChatGPT here.

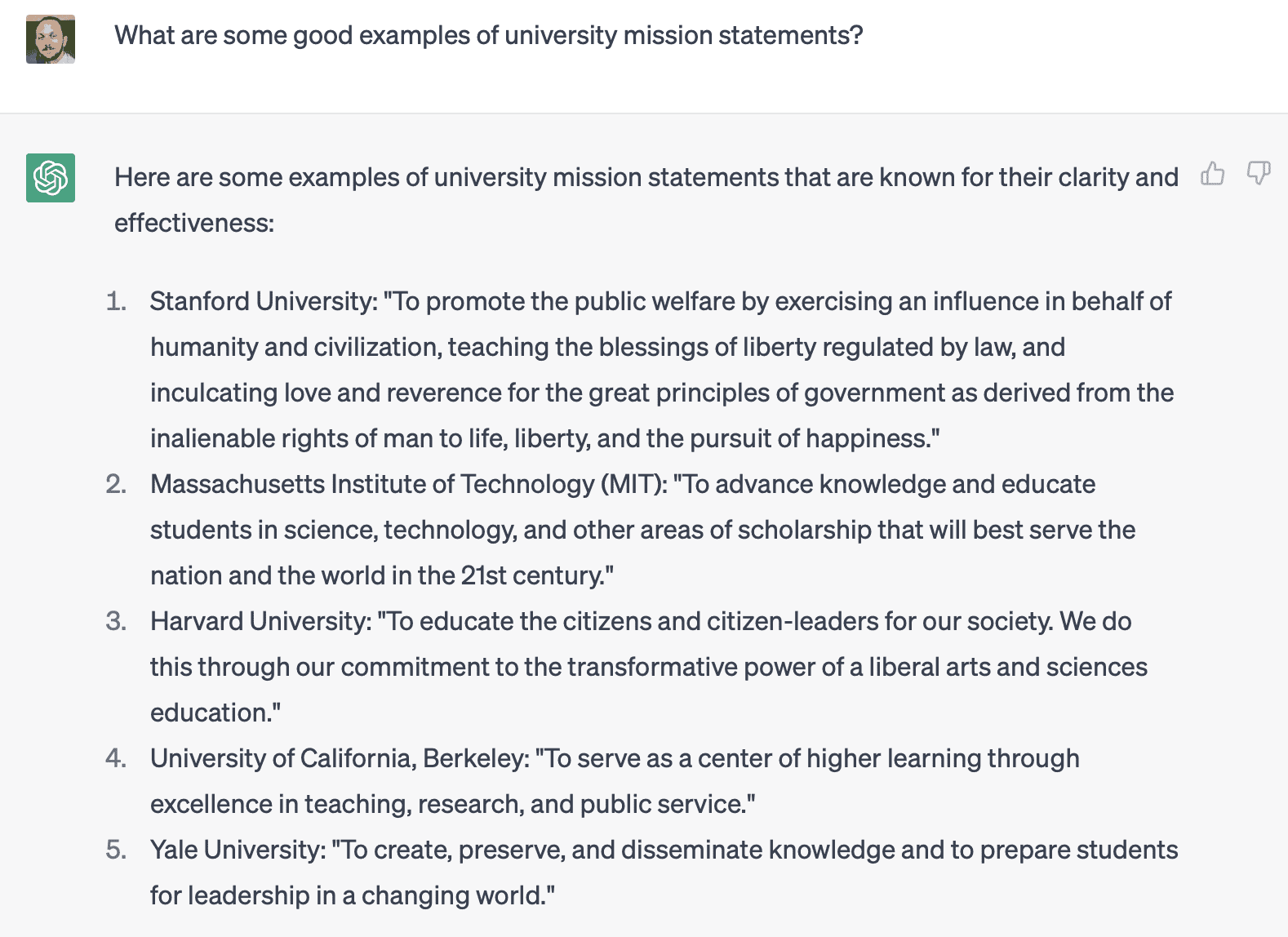

I asked ChatGPT, “What are some good examples of university mission statements?” and got the list below:

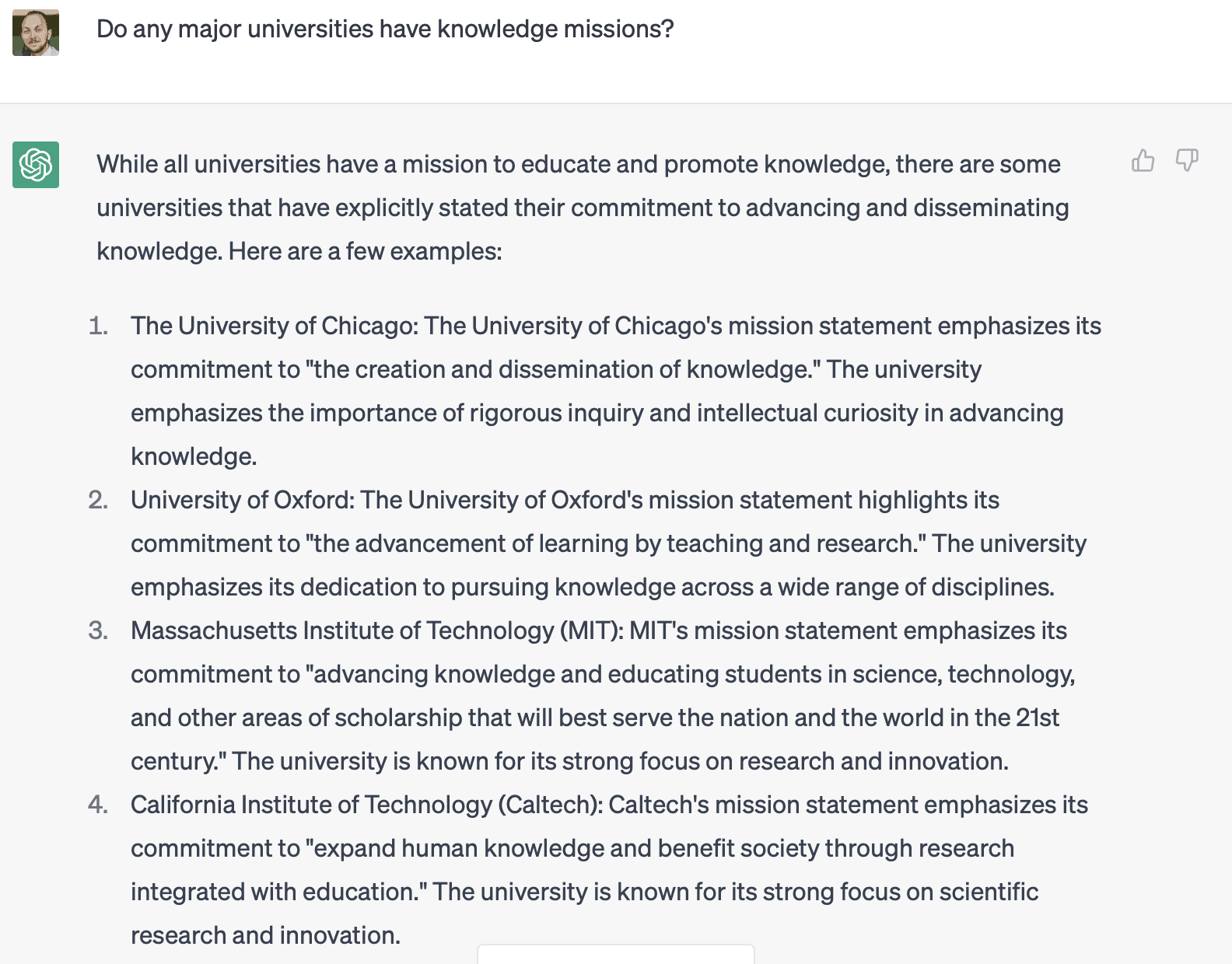

Bingo! This was exactly the kind of research assistance I had hoped for—a synthesis of information from multiple sources on one “page.” Research that might have taken me 10 minutes was done in seconds. I pressed on. Next I asked: “Do any major universities have knowledge missions?” Again, a list with sources:

This all sounded very plausible. No doubt MIT and Caltech’s missions would say something specific about knowledge through science and technology as compared to Oxford or Chicago. I then asked about value propositions in mission statements (which you can read in the full chat transcript). Again, helpful quotes and sources worth pursuing. (And honestly, that a computer system generated such coherent, topically-relevant information on the fly is still mind-blowing.)

Finally, I asked “How do universities define what students need to know?” I was presented with another list of answers, though these were a bit off-topic. (Again, see the full transcript for the responses.) So far the chatbot responded with relevant answers and presented sources of just the kind I was looking for. This was a good starting point for me to read these original sources and do some synthesis. So I asked: “Can you give me citations for your response?”

Voila! ChatGPT gave me sources including:

- Carnegie Mellon University, “Curriculum Development: Overview”

- The University of Texas at Austin, “Developing Student Learning Outcomes”

- Council for Higher Education Accreditation, “Academic Standards”

- National Institute for Learning Outcomes Assessment, “Assessment Overview”

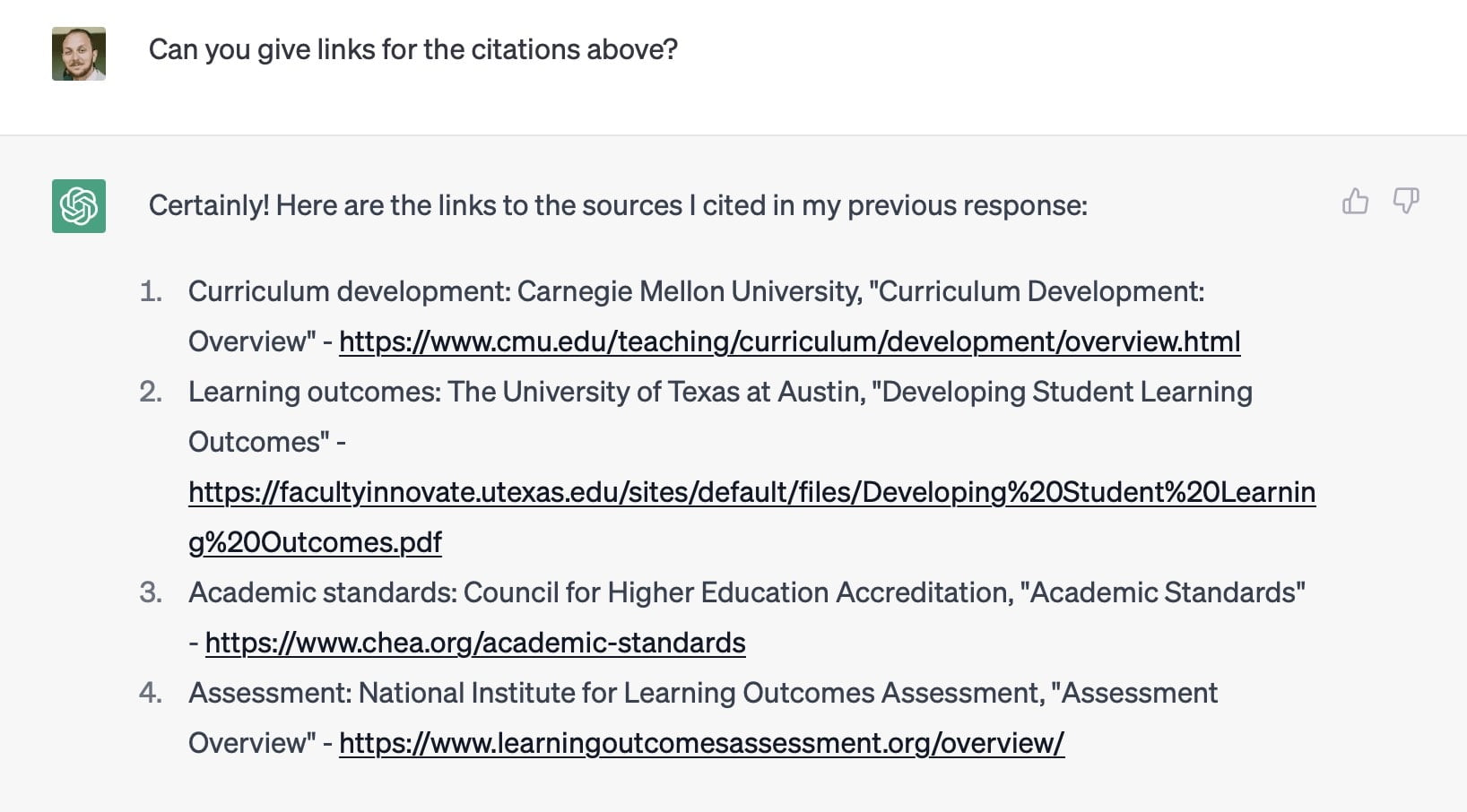

I figured if ChatGPT could do all this synthesis it could give me links to the sources themselves. So I asked: “Can you give links for the citations above?” And presto! A list of links:

I clicked the first link. A “File not found” page on the CMU website. I clicked the second. A “Page not found” page on the UT website. Same for the other two–the pages didn’t exist. Perhaps ChatGPT had scraped old information before these institutions either revised, unpublished, or moved these reports. Maybe it was dealing in stale information. So I Google searched for each report and the respective institution. I found nothing.

The links didn’t work because the none of the sources exist. These institutions had never published reports of these names.

Furthermore, Google searching for the verbatim text of each purported source yielded nothing. Nobody had ever published anything that ChatGPT was verbatim attributing to major universities and institutions studying higher education. ChatGPT fabricated the quotes and the fact that prestigious institutions published them, and it fabricated the links to the non-existent sources.

Consider two of the purported links:

- https://www.chea.org/academic-standards

- https://www.cmu.edu/teaching/curriculum/development/overview.html

These look like links to content on the domains of real institutions. They are not. ChatGPT generated plausible but nonexistent links in the same way it generated plausible but nonexistent sources. This alone is worrisome. But what happened next is what I found most alarming.

Confronting ChatGPT

I confronted ChatGPT with the problem, saying “Those links don’t work. Did you make up those sources and links?” To which it immediately replied: “I apologize for the error in my previous response. You are correct, the links I provided do not work. I apologize for any confusion this may have caused.” The AI readily acknowledged that the links were bad, then churned out an entirely new set of nonexistent links to the same nonexistent sources. The system was able to articulate that it was wrong, but it didn’t seem to differentiate between links that didn’t work and sources that weren’t real.

I typed “Again, those links are not working. The citations do not exist. You seem to have fabricated them.” And again ChatGPT apologized: “I apologize for the confusion and frustration my previous responses may have caused. You are correct that the links I provided did not work, and I should have double-checked them before sharing them. To ensure that I am providing accurate information and sources, I have conducted new research on this topic and compiled a list of sources that provide more information on how universities define what students need to know. Here are some sources:”

What followed? More broken links to bogus sources. At this point I stopped conversing with ChatGPT.

More disturbing than the fact that ChatGPT made up sources is the fact that the AI readily pushes “information” about which it is able to describe as inaccurate. I didn’t present the system with any extra information to inform it that it was wrong. I didn’t need to prove to the system that it was wrong. Whatever mechanism allowed the AI to check that it was erroneous when I prodded it did not check the AI’s responses before it gave them. Simply put: ChatGPT had enough information to tell me it was giving me incorrect information, but it did it anyway.

How should we treat truth with ChatGPT?

My conversation with ChatGPT about citing university mission statements was verifiable. It is the fact that I could and did check its sources that allows me to highlight the problematic way in which it responds. Based on my conversation, it seems the system is leaving the job of verification up to the end user even when the system itself is capable of that verification.

We should all wonder: what responses are systems like ChatGPT giving to users that the systems themselves can verify are rooted in bad information? If we know that this AI so cavalierly presents information that it can describe as false, what “truth” is AI presenting in situations that aren’t so easily verified as the existence of specified documents, sources, and links? And is it too extreme to claim that ChatGPT cannot adequately differentiate its own concoctions from objective information?

I am not the first person to point out problems with generative AI and fabricated sources. However, I do want to paint the picture of how this fabrication “feels” from the point of view of the user. It does not “feel” like fakery. The conversations and sources seem plausible and reasoned.

The computer, which we consider to operate in the realm of objective data, is failing at the task that can be objectively verified. Conversely, when it comes to generation of reasonable subjective judgment–something that we have long considered outside the realm of capabilities of computer systems–the computer is quite convincing.

I’m not implying a malice on behalf of the AI itself. Certainly if a human research assistant repeatedly fabricated citations and tried passing them off as legitimate, that person would be penalized. But I can’t ascribe that level of agency and self-awareness to ChatGPT, in largest part because I don’t understand it at a deep enough level. However to the extent that we want to treat AI bots as capable of doing the kind of informing that we would trust to a human, do the ways in which we deal with bad information when it comes from other people translate to computers that provide similar junk? Nobody’s going to “fire” ChatGPT for trying to pass off bogus information. What, then, should be done? If the answer is just “they’ll patch it in the next version,” is that a good enough answer?

I’m aware that ChatGPT, along with all the generative AI bots out there right now, come with their disclaimers. I’m sure that per the terms and conditions of the service, I was outside of the boundary of what OpenAI claims the system can do. The danger here is that we are entering a near future where plausibility and coherence trod on veracity. How many people today are asking these systems for information and getting answers that are plausible and not only inaccurate but that the system can simultaneously describe as inaccurate? This episode has left me thinking that the ways we are accustomed to dealing with information coming from both people as well as computers are both insufficient when dealing with an AI as advanced as ChatGPT. A serious philosophical debate along with clear guidelines for human users are in order. Otherwise, our woes about disinformation and misinformation are about to get much more complicated and serious.

The problem is not just that AI misinforms. It’s that it misinforms knowingly. How are we to respond?

PS — Stay tuned for more on my research into critical knowledge and the role of higher education in developing and imparting it.